Zero-Reference Deep Curve Estimation (Zero-DCE) for Low-Light Image Enhancement

2 City University of Hong Kong, Hong Kong

3 Nanyang Technological University, Singapore

4 Beijing Jiaotong University, Beijing, China

Abstract

This paper presents a novel method, Zero-Reference Deep Curve Estimation (Zero-DCE), which formulates light enhancement as a task of image-specific curve estimation with a deep network. Our method trains a lightweight deep network, DCE-Net, to estimate pixel-wise and high-order curves for dynamic range adjustment of a given image. The curve estimation is specially designed, considering pixel value range, monotonicity, and differentiability. Zero-DCE is appealing in its relaxed assumption on reference images, i.e., it does not require any paired or unpaired data during training. This is achieved through a set of carefully formulated non-reference loss functions, which implicitly measure the enhancement quality and drive the learning of the network. Our method is efficient because image enhancement is achieved by an intuitive and simple nonlinear curve mapping. Despite its simplicity, we show that it generalizes well to diverse lighting conditions. Extensive experiments on various benchmarks demonstrate the advantages of our method over state-of-the-art methods qualitatively and quantitatively. Furthermore, the potential benefits of Zero-DCE for face detection in the dark are discussed. We further present an accelerated and light version of Zero-DCE, called Zero-DCE++, that takes advantage of a tiny network with just 10K parameters. Zero-DCE++ has a fast inference speed (1000 FPS on a single GPU and 11 FPS on a single CPU for an image of size 1200×900×3) while keeping the enhancement performance of Zero-DCE.

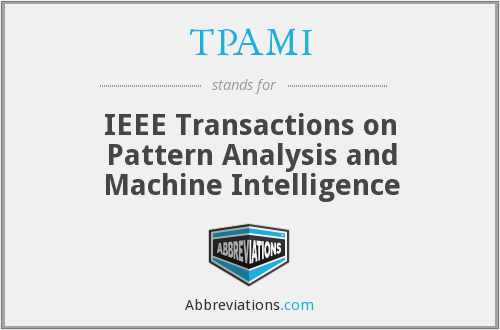

Pipeline

The pipeline of our method. (a) The framework of Zero-DCE. A DCE-Net is devised to estimate a set of best-fitting Light-Enhancement curves (LE-curves: LE(I(x); α) = I(x) + αI(x)(1 - I(x))) that iteratively enhance a given input image. (b, c) LE-curves with different adjustment parameters α and numbers of iterations n. In (c), α1, α2, and α3 are equal to -1 while n is equal to 4. In each subfigure, the horizontal axis represents the input pixel values and the vertical axis represents the output pixel values.
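The LE-curve in the caption can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the released implementation: the function names `le_curve` and `enhance` are hypothetical, pixel values are assumed to be normalized to [0, 1], and one scalar α per iteration is used for clarity, whereas the method estimates pixel-wise parameters.

```python
def le_curve(x, alpha):
    """One LE-curve step: LE(x; alpha) = x + alpha * x * (1 - x).

    x is a pixel value normalized to [0, 1]; alpha is assumed to lie in
    [-1, 1], which keeps the output in [0, 1] and the mapping monotonic.
    """
    return x + alpha * x * (1 - x)


def enhance(x, alphas):
    """Apply the LE-curve once per entry of alphas (a higher-order curve)."""
    for alpha in alphas:
        x = le_curve(x, alpha)
    return x


if __name__ == "__main__":
    dark = 0.2
    # With the formula as written, a positive alpha raises the value
    # and a negative alpha lowers it.
    print(le_curve(dark, 1.0))
    print(le_curve(dark, -1.0))
    # Repeating the step bends the curve further than a single step can.
    print(enhance(dark, [1.0] * 4))
```

Because the endpoints 0 and 1 map to themselves for any α, repeated application changes the shape of the curve without leaving the valid pixel range.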

Highlights

We propose the first low-light enhancement network that is independent of paired and unpaired training data, thus avoiding the risk of overfitting. As a result, our method generalizes well to various lighting conditions.

We design an image-specific curve that is able to approximate pixel-wise and higher-order curves by iteratively applying itself. Such an image-specific curve can effectively perform mapping within a wide dynamic range.

We show the potential of training a deep image enhancement model in the absence of reference images through task-specific non-reference loss functions that indirectly evaluate enhancement quality. Zero-DCE can process images in real time (about 500 FPS for images of size 640×480×3 on a GPU) and takes only 30 minutes to train.

Results

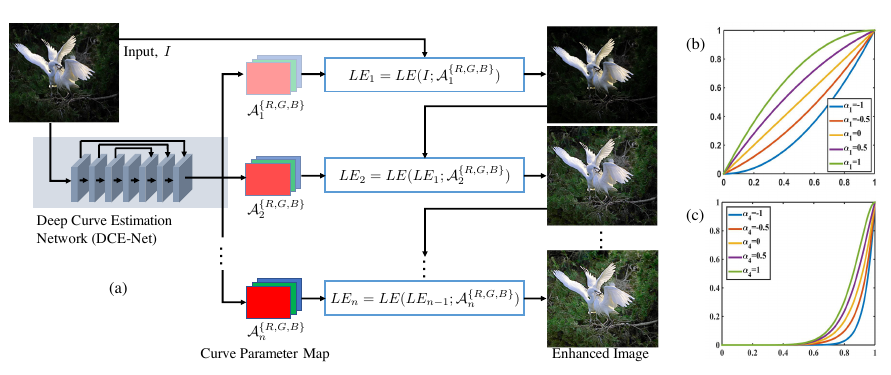

1. Visual Comparisons on Typical Low-light Images

2. Visual Face Detection Results Before and After Enhancement by Zero-DCE

3. Real Low-light Video with Varying Illumination Enhanced by Zero-DCE

4. Self-training (taking first 100 frames as training data) for Low-light Video Enhancement

Ablation Studies

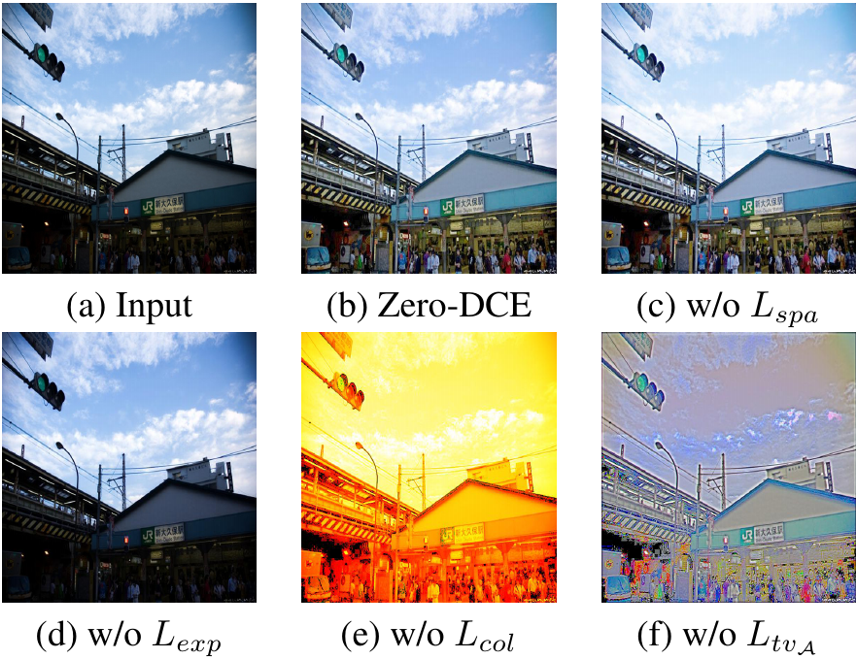

1. Contribution of Each Loss

Ablation study of the contribution of each loss (spatial consistency loss L_spa, exposure control loss L_exp, color constancy loss L_col, illumination smoothness loss L_tvA).
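This page names the losses without defining them. As a rough illustration of what a non-reference loss can look like, the sketch below implements two of them, the exposure control loss and the color constancy loss, following our reading of the paper: the first penalizes the gap between the average intensity of local regions and a target exposure level, and the second penalizes differences between the mean values of the color channels. The function names, the image layout (rows of `(r, g, b)` tuples in [0, 1]), the patch size of 16, and the target level of 0.6 are assumptions made for this sketch, not the released code.

```python
from itertools import combinations


def color_constancy_loss(image):
    """Sum of squared differences between the mean values of channel pairs.

    image: list of rows, each row a list of (r, g, b) tuples in [0, 1].
    """
    pixels = [px for row in image for px in row]
    means = [sum(px[c] for px in pixels) / len(pixels) for c in range(3)]
    return sum((means[p] - means[q]) ** 2
               for p, q in combinations(range(3), 2))


def exposure_control_loss(image, patch=16, target=0.6):
    """Mean absolute gap between each patch's average intensity and target.

    Patches are non-overlapping patch x patch regions. Rows or columns that
    do not fill a whole patch are ignored, so the image is assumed to be at
    least patch x patch in size.
    """
    height, width = len(image), len(image[0])
    gaps = []
    for top in range(0, height - patch + 1, patch):
        for left in range(0, width - patch + 1, patch):
            total = 0.0
            for row in image[top:top + patch]:
                for px in row[left:left + patch]:
                    total += sum(px) / 3.0  # intensity = mean over channels
            gaps.append(abs(total / (patch * patch) - target))
    return sum(gaps) / len(gaps)


if __name__ == "__main__":
    # A neutral gray image at the target level: both losses are close to 0.
    gray = [[(0.6, 0.6, 0.6)] * 32 for _ in range(32)]
    print(color_constancy_loss(gray), exposure_control_loss(gray))
```

Neither function needs a reference image: each scores the enhanced result on its own, which is what allows training without paired or unpaired data.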

2. Effect of Parameter Settings

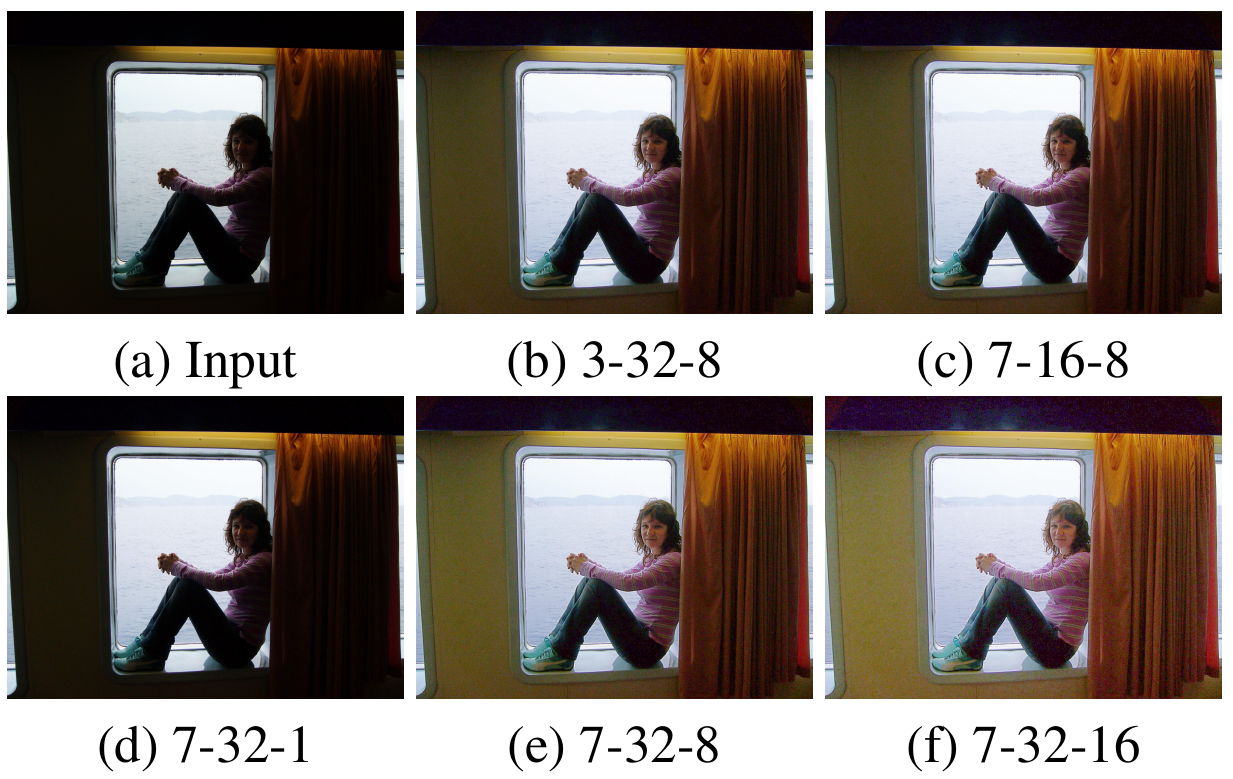

Ablation study of the effect of parameter settings. l-f-n represents the proposed Zero-DCE with l convolutional layers, f feature maps of each layer (except the last layer), and n iterations.

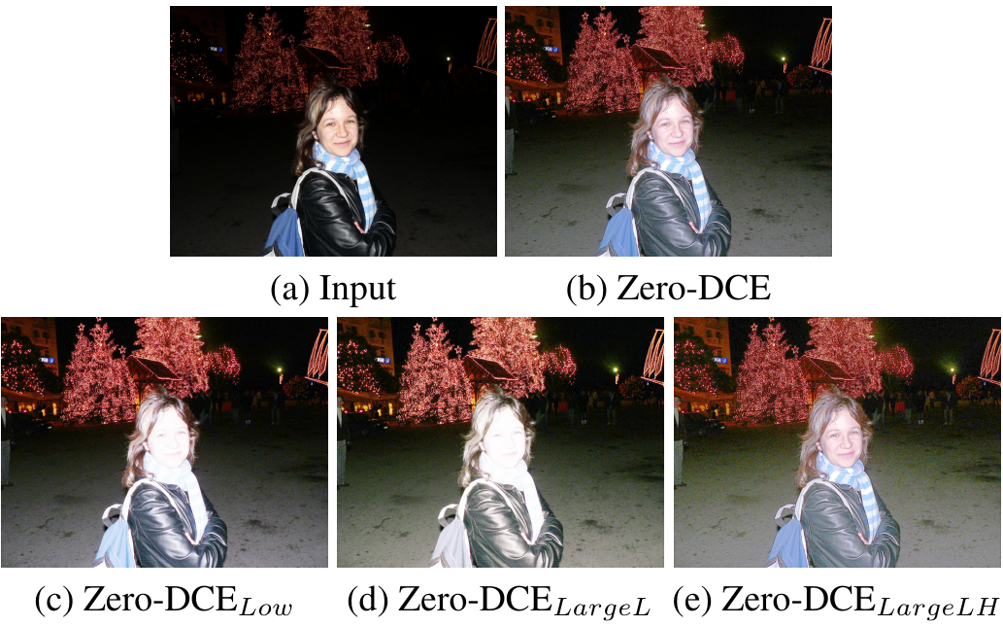

3. Impact of Training Data

To test the impact of training data, we retrain Zero-DCE on different datasets: 1) only 900 low-light images out of the 2,422 images in the original training set (Zero-DCE_Low), 2) 9,000 unlabeled low-light images provided in the DARK FACE dataset (Zero-DCE_LargeL), and 3) 4,800 multi-exposure images from the data-augmented combination of the Part1 and Part2 subsets in the SICE dataset (Zero-DCE_LargeLH).

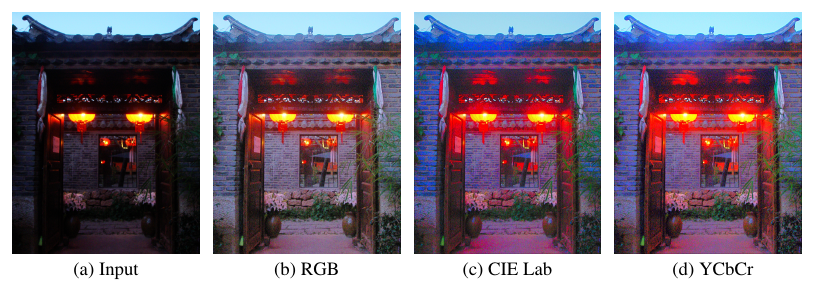

4. Advantage of Three-channel Adjustment

Ablation study of the advantage of three-channel adjustment (RGB, CIE Lab, YCbCr color spaces).

Citation

@Article{Zero-DCE,

author = {Guo, Chunle and Li, Chongyi and Guo, Jichang and Loy, Chen Change and Hou, Junhui and Kwong, Sam and Cong, Runmin},

title = {Zero-reference deep curve estimation for low-light image enhancement},

journal = {CVPR},

pages = {1780--1789},

year = {2020}

}

@Article{Zero-DCE++,

author = {Li, Chongyi and Guo, Chunle and Loy, Chen Change},

title = {Learning to Enhance Low-Light Image via Zero-Reference Deep Curve Estimation},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence},

pages = {},

year = {2021},

doi={10.1109/TPAMI.2021.3063604}

}

Contact

If you have any questions, please contact Chongyi Li at lichongyi25@gmail.com or Chunle Guo at guochunle@tju.edu.cn.