lichongyi25 @ gmail.com


Underwater Scene Prior Inspired Deep Underwater Image and Video Enhancement

Abstract:

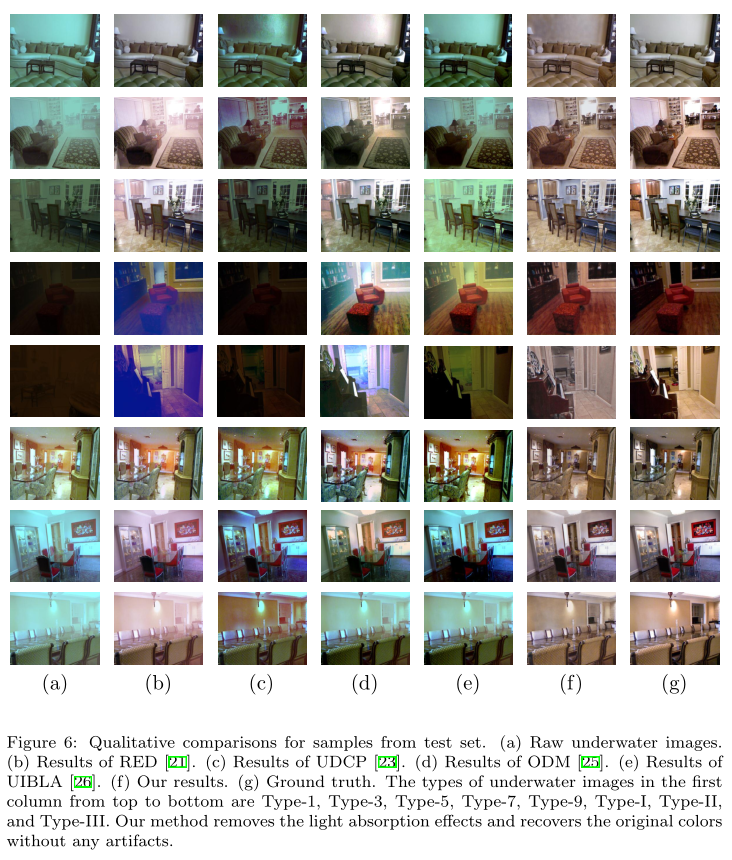

In underwater scenes, wavelength-dependent light absorption and scattering degrade the visibility of images and videos. Such degradation reduces the accuracy of pattern recognition, visual understanding, and key feature extraction in underwater scenes. In this paper, we propose UWCNN, an underwater image enhancement convolutional neural network (CNN) model based on an underwater scene prior. Instead of estimating the parameters of an underwater imaging model, UWCNN directly reconstructs the clear latent underwater image. This is made possible by the underwater scene prior, which we use to synthesize underwater image training data. Moreover, thanks to its light-weight network structure and effective training data, UWCNN can be easily extended to frame-by-frame enhancement of underwater videos. Specifically, by combining an underwater imaging physical model with the optical properties of underwater scenes, we first synthesize underwater image degradation datasets that cover a diverse set of water types and degradation levels. Then, a light-weight CNN model is trained for each underwater scene type on the corresponding training data. Finally, UWCNN is directly extended to underwater video enhancement. Experiments on real-world and synthetic underwater images and videos demonstrate that our method generalizes well to different underwater scenes.

Paper:

Chongyi Li, Saeed Anwar, and Fatih Porikli

Underwater scene prior inspired deep underwater image and video enhancement.

[PDF - official version] [PDF - arXiv version]

Results:

10 Types of Synthesized Underwater Image Datasets:

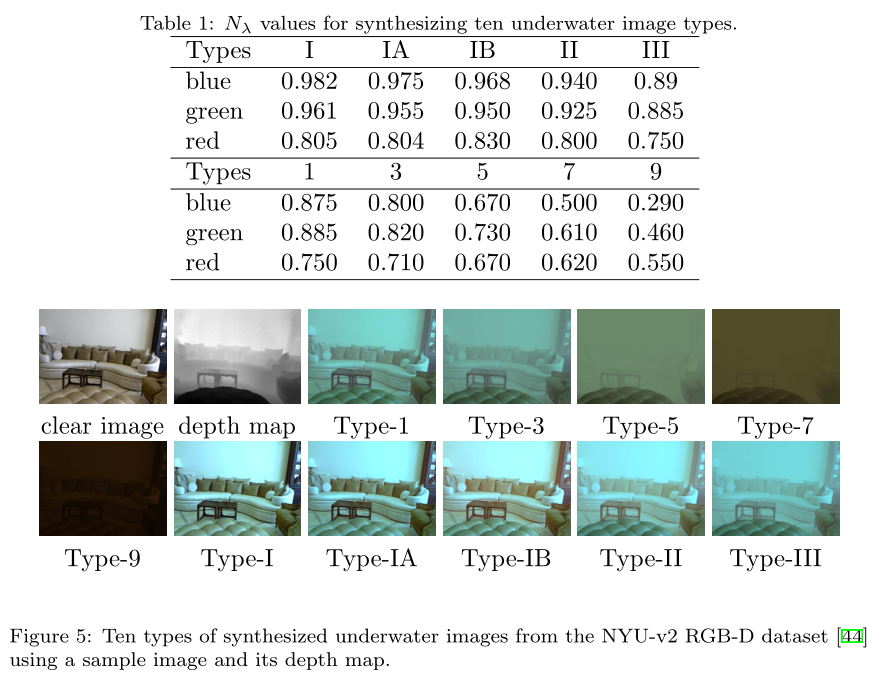

To synthesize the underwater image degradation datasets, we use the attenuation coefficients described in Table 1 for the different oceanic and coastal water types: I, IA, IB, II, and III for open ocean waters, and 1, 3, 5, 7, and 9 for coastal waters. Type-I is the clearest and Type-III the most turbid open ocean water; similarly, Type-1 is the clearest and Type-9 the most turbid coastal water. We apply Eqs. (1) and (2) (see the paper) to the RGB-D NYU-v2 indoor dataset, which consists of 1449 images, to build ten types of underwater image datasets. To improve the quality of the datasets, we crop the NYU-v2 images from their original size of 480 × 640 to 460 × 620.
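A minimal Python/NumPy sketch of the per-image synthesis step described above. It is not the released Matlab code. It assumes the common simplified underwater formation model I = J·T + B·(1 - T) with per-channel transmission T = exp(-β·d) (Beer-Lambert form), assumed here to correspond to Eqs. (1) and (2); check the paper for the exact equations, and rescale β if Eq. (2) uses a different base. The centered crop is also an assumption, since only the crop size is stated. The β and B values used in the test are hypothetical placeholders, not the Table 1 coefficients.

```python
import numpy as np


def center_crop(img, out_h=460, out_w=620):
    """Center-crop an H x W (x C) array, e.g. 480 x 640 -> 460 x 620.

    ASSUMPTION: the crop is centered; only the output size is documented.
    """
    h, w = img.shape[:2]
    top = (h - out_h) // 2
    left = (w - out_w) // 2
    return img[top:top + out_h, left:left + out_w]


def synthesize_underwater(clean, depth, beta, background):
    """Degrade a clean RGB image with a simplified underwater model.

    clean:      H x W x 3 float array in [0, 1]
    depth:      H x W float array, scene depth in meters
    beta:       3 per-channel attenuation coefficients (1/m), R, G, B order;
                placeholders here, the real values come from Table 1
    background: 3 per-channel homogeneous background light values in [0, 1]
    """
    beta = np.asarray(beta, dtype=np.float64)
    background = np.asarray(background, dtype=np.float64)
    # Per-channel transmission T = exp(-beta * d), broadcast to H x W x 3.
    t = np.exp(-depth[..., None] * beta[None, None, :])
    # Attenuated scene radiance plus backscattered background light.
    degraded = clean * t + background * (1.0 - t)
    return np.clip(degraded, 0.0, 1.0)
```

Calling `synthesize_underwater(center_crop(rgb), center_crop(depth), beta, background)` once per water type, each time with that type's coefficients, yields one image per dataset. As depth grows, every channel is pulled toward the background light, and the channel with the largest β fades first.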

This dataset is for non-commercial use only.

[Type-I] [Baidu Cloud Link][Google Drive Link]

[Type-IA] [Baidu Cloud Link][Google Drive Link]

[Type-IB] [Baidu Cloud Link][Google Drive Link]

[Type-II] [Baidu Cloud Link][Google Drive Link]

[Type-III] [Baidu Cloud Link][Google Drive Link]

[Type-1] [Baidu Cloud Link][Google Drive Link]

[Type-3] [Baidu Cloud Link][Google Drive Link]

[Type-5] [Baidu Cloud Link][Google Drive Link]

[Type-7] [Baidu Cloud Link][Google Drive Link]

[Type-9] [Baidu Cloud Link][Google Drive Link]

If you use these datasets, please cite the related papers. Thanks.

C. Li, S. Anwar, and F. Porikli, “Underwater scene prior inspired deep underwater image and video enhancement,” Pattern Recognition, vol. 98, pp. 1-11, 2019.

N. Silberman, D. Hoiem, and P. Fergus, “Indoor segmentation and support inference from RGBD images,” ECCV, pp. 746-760, 2012.

If you use this code, please cite the related paper. Thanks.

How to generate 10 Types of Synthesized Underwater Image Datasets by using your RGB-D images?

We provide our Matlab code that generates the 10 types of synthesized underwater image datasets. For your convenience, the Matlab code uses the RGB-D NYU-v2 indoor dataset as an example.
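To use your own RGB-D images, the depth map should be pixel-aligned with the RGB image and expressed in meters before synthesis. The Python sketch below is a hypothetical helper, not part of the released Matlab code. It assumes a raw depth map stored in millimeters with 0 marking missing measurements, a common but not universal convention for consumer depth sensors; set `scale` to match your sensor (`scale=1.0` if depth is already in meters). Filling holes with the farthest valid depth is a crude placeholder choice.

```python
import numpy as np


def prepare_depth(depth_raw, rgb_shape, scale=1000.0):
    """Hypothetical helper: convert raw depth to meters and fill holes.

    depth_raw: H x W array; ASSUMED to be in millimeters (scale=1000),
               with 0 marking pixels where the sensor returned no depth.
    rgb_shape: shape of the matching RGB image, used to check alignment.
    """
    if depth_raw.shape[:2] != tuple(rgb_shape[:2]):
        raise ValueError("depth map and RGB image must have the same height and width")
    depth = depth_raw.astype(np.float64) / scale
    valid = depth > 0
    if not valid.any():
        raise ValueError("depth map contains no valid pixels")
    # Crude placeholder: treat missing pixels as the farthest valid depth.
    depth[~valid] = depth[valid].max()
    return depth
```

Treating holes as far away keeps them consistent with the heaviest degradation in the scene; a proper depth inpainting method would give more realistic results.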

[Matlab] [Baidu Cloud Link: password 1234][Google Drive Link]

[NYU-v2 indoor dataset] [Baidu Cloud Link: password 1234][Google Drive Link]